The goal of this lab was to introduce geometric correction. The lab covered two major types of geometric correction: image-to-map rectification and image-to-image registration. Both are usually applied to satellite images as a preprocessing step before biophysical and sociocultural information is extracted from them. In this lab, a United States Geological Survey (USGS) 7.5 minute digital raster graphic image of the Chicago Metropolitan Statistical Area was used to collect ground control points (GCPs). Additionally, a corrected Landsat TM image of eastern Sierra Leone was used to rectify a geometrically distorted image.

Methods:

Erdas Imagine was used to complete this lab.

Part One: Image-to-Map Rectification

In the first part of the lab, a USGS 7.5 minute digital raster graphic image of the Chicago region (Chicago_drg.img) was added to one viewer, and a second Chicago region image (Chicago_2000.img) was added to another viewer. Chicago_2000.img was highlighted because it was the image to be rectified. Next, the Multispectral tab was selected and Control Points was clicked, which opened the Multipoint Geometric Correction window. Before any GCPs could be added to the images, the default GCPs had to be deleted. Because a first-order polynomial transformation was used, at least three pairs of GCPs were required. However, four pairs were added to give the output image a better fit. To do this, the Create GCP tool was selected and the first GCP pair was placed on Chicago_drg.img and Chicago_2000.img. To make the points easier to see against the images, their color was changed to purple. Three more GCP pairs were then added, for a total of four. To ensure the accuracy of the GCPs, the root mean square (RMS) error had to be 2.0 or below for part one (Figure 1). The GCPs were repositioned several times to bring the error down. Once the RMS error was below 2.0, the Display Resample Image Dialogue tool was selected to perform the geometric correction. All parameters in the resample image window were left at their defaults and the tool was run.

Figure 1: RMS Error Below 2.0 With 4 GCPs
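The relationship between the polynomial order, the minimum number of GCP pairs, and the RMS error can be illustrated with a short sketch. This is not how Erdas Imagine computes its values internally; it is a minimal least-squares example using NumPy, and the function names and the GCP coordinates are hypothetical. It also measures residuals in reference coordinates, which may differ from the units and direction in which the software reports RMS error.

```python
import numpy as np


def min_gcps(order: int) -> int:
    """Minimum number of GCP pairs for a 2-D polynomial of the given order."""
    return (order + 1) * (order + 2) // 2


def _design_matrix(src):
    """Rows of [1, x, y] for each source point (first-order terms only)."""
    src = np.asarray(src, dtype=float)
    return np.column_stack([np.ones(len(src)), src[:, 0], src[:, 1]])


def fit_first_order(src, ref):
    """Least-squares fit of x' = a0 + a1*x + a2*y and y' = b0 + b1*x + b2*y.

    src: GCP coordinates in the distorted image (hypothetical input).
    ref: matching coordinates in the reference map or image.
    Returns a (3, 2) array: column 0 holds a0..a2, column 1 holds b0..b2.
    """
    if len(src) < min_gcps(1):
        raise ValueError("a first-order polynomial needs at least 3 GCP pairs")
    coeffs, *_ = np.linalg.lstsq(
        _design_matrix(src), np.asarray(ref, dtype=float), rcond=None
    )
    return coeffs


def rms_error(src, ref, coeffs):
    """Total RMS error: root of the mean squared distance between the
    transformed source points and their reference positions."""
    residuals = _design_matrix(src) @ coeffs - np.asarray(ref, dtype=float)
    distances = np.hypot(residuals[:, 0], residuals[:, 1])
    return float(np.sqrt(np.mean(distances ** 2)))


# Four hypothetical GCP pairs: one more than the minimum of three, so the
# fit is over-determined and the RMS error is meaningful.
source_points = [(12.0, 15.0), (480.0, 22.0), (30.0, 410.0), (470.0, 395.0)]
reference_points = [(1036.5, 2955.0), (2440.0, 2934.5), (1090.5, 1770.0), (2410.5, 1815.5)]

coefficients = fit_first_order(source_points, reference_points)
print(f"Total RMS error: {rms_error(source_points, reference_points, coefficients):.3f}")
```

With exactly three pairs the first-order fit passes through every point and the RMS error is zero regardless of how well the points were placed, which is one reason to collect more than the minimum.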

Part Two: Image-to-Image Registration

In part two of the lab, two new images were added to separate viewers. One of the images (sierra_leone_east1991.img) has serious geometric distortion. The second image (sl_reference_image.img) is an already referenced image. The Swipe tool was used to show the contrast between the referenced image and the geometrically distorted image by moving the slider tab left and right (Figure 2).

Figure 2: Swipe Tool Showing the Contrast Between the Referenced Image and the Geometrically Distorted Image

Figure 3: RMS Error Below 0.5 With 12 GCPs

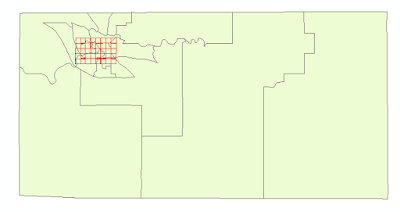

Figure 4 shows the geometrically corrected image from part one, produced with the Display Resample Image Dialogue tool. It is the result of rectifying the Chicago region image (Chicago_2000.img) against the USGS 7.5 minute digital raster graphic of the Chicago region (Chicago_drg.img). The Chicago image had minimal distortion, so only a first-order polynomial transformation was needed. Nearest neighbor resampling was used to assign pixel values to the output image.

Figure 4: Result of Geometrically Corrected Image Part One
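To make the nearest neighbor step concrete, the sketch below resamples a small array through a first-order (affine) mapping. It is an illustration only, not the Erdas Imagine implementation: the function name, the 2x3 matrix layout, and the fill value are assumptions made for this example. Each output pixel is mapped back to a location in the input image and takes the value of the closest input pixel, so no new brightness values are created.

```python
import numpy as np


def resample_nearest(image, out_shape, out_to_in, fill=0):
    """Nearest neighbor resampling through an affine mapping.

    image:     2-D array holding the input (distorted) image.
    out_shape: (rows, cols) of the output grid.
    out_to_in: 2x3 matrix mapping output (col, row, 1) to input (col, row).
               This layout is an assumption made for this sketch.
    fill:      value for output pixels that fall outside the input image.
    """
    image = np.asarray(image)
    rows, cols = np.indices(out_shape)
    # Homogeneous output coordinates, one column per output pixel.
    coords = np.stack([cols.ravel(), rows.ravel(), np.ones(rows.size)])
    in_xy = np.asarray(out_to_in, dtype=float) @ coords

    # Round to the closest input pixel centre.
    in_col = np.rint(in_xy[0]).astype(int)
    in_row = np.rint(in_xy[1]).astype(int)

    # Keep only the output pixels whose source location lies inside the input.
    valid = (
        (in_row >= 0) & (in_row < image.shape[0])
        & (in_col >= 0) & (in_col < image.shape[1])
    )
    out = np.full(rows.size, fill, dtype=image.dtype)
    out[valid] = image[in_row[valid], in_col[valid]]
    return out.reshape(out_shape)


# Hypothetical 3x4 image shifted one pixel to the left in the output.
demo = np.arange(12).reshape(3, 4)
shift_left = [[1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]]
print(resample_nearest(demo, demo.shape, shift_left, fill=-1))
```

Because values are copied rather than averaged, the original pixel values are preserved, at the cost of a slightly blocky appearance along edges.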

Figure 5: Result of Geometrically Corrected Image Part Two

Figure 6: Swipe Tool Showing the Contrast Between the Original Referenced Image and the Geometrically Corrected Image

Sources:

Satellite images from the Earth Resources Observation and Science Center, United States Geological Survey. Digital raster graphic (DRG) from the Illinois Geospatial Data Clearinghouse.